Doing research intensive week

Latour / Science in Action

Science as a form of argumentation. Referring to research, binding yourself to a network as a rhetoric. // Seems like there is a whole other network created for climate change denial which is not the scientific one, perhaps a social media one, and that one also has a good rhetoric.

Water flow on a terrain metaphor. Research has set a brick wall to some flow directions. But the terrain can also change (the empirical reality) and erode those walls. Over decades the opinion aligns with the landscape (water canal is formed).

Positioning research to audience

Instrumental reason: who is the target research audience, and which journals? // Maybe my instrumental reason is just 'using' those methods to get the social change out.

What is new?

- Adding to line of research

- Disproving key data

- Showing through research what "everybody already knows" but has not been demonstrated before (validating an axiom with research).

- Introducing an idea or framework not known to the field

If you link to the wrong community, the new is not considered new.

Positioning can be implicit: even sentence structure implies a position, with short sentences in the natural sciences, longer ones in economics and much longer ones in the humanities.

Positioning can be completely explicit: state exactly where you work, whom you want to influence, what is more relevant and what is less.

60/20/20 PhD (or even 80/10/5)

- 60% build on old research

- 20% appropriate other research by analogy.

- 20% novelty.

Clever research: say as much as possible by saying as little as possible, through positioning yourself correctly within other people's work.

Field research

- Monotone description of what happened

- Interpretation

- Reflection

Background research and theory keep interplaying; be careful not to continue down inductive rabbit holes.

Path finding checks: Are theoretical constructs and/or research questions finding correlates in the empirical field?

Theory-Data relationship

Kurt Lewin: "There is nothing so practical as a good theory" (it guides the practice).

- Capital T theory: Encompassing, intentional, conceptual system (Newtonian physics, evolution theory..)

- theory / theorizing: forming concepts and articulating relationships to better discuss empirical phenomena.

// One rather abstract and broad question: Do you think that for every type of theory, even the super specific analysis of slices of flesh in rodents, we should attach a deduction/induction on why having this theory may contribute to social and moral good?

Types of theory

1) "What":

- Naming theory, description of the dimensions and characteristics of some phenomena.

- Classification: More elaborate naming with some structural relations (typologies, taxonomies, frameworks)

2) "How" and "Why" theories for understanding

- Defamiliarizing (deconstructing)

- Sensitizing

- Conjectures from real world situations

3) "What will be", theories for predicting.

4) "How to do" design and action theories

// My article: counts as "toward" a theory.

Sampling

How to get a representative sample? It has to mirror the composition of the population.
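One way to make a sample mirror the composition of the population is proportional stratified sampling. The sketch below is only an illustration under assumptions: the function name, the group labels and the group sizes are hypothetical and do not come from the course material.

```python
import random
from collections import defaultdict

def proportional_stratified_sample(population, stratum_of, n, seed=0):
    """Draw roughly n units so that each stratum keeps its population share."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        # Allocate sample slots in proportion to the stratum's share of the population.
        # Rounding means the total can differ slightly from n in general.
        k = round(n * len(members) / len(population))
        sample.extend(rng.sample(members, min(k, len(members))))
    return sample

# Hypothetical population: 60 students, 30 employees, 10 retirees
population = (
    [("student", i) for i in range(60)]
    + [("employee", i) for i in range(30)]
    + [("retiree", i) for i in range(10)]
)
sample = proportional_stratified_sample(population, lambda unit: unit[0], n=20)

counts = {}
for group, _ in sample:
    counts[group] = counts.get(group, 0) + 1
print(counts)
```

With these made-up numbers the sample keeps the 60/30/10 split of the population. Which characteristics to stratify on remains a research decision that the code cannot make.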

An Introduction to Qualitative Research / Uwe Flick

Observation and Ethnography

Ethnography has taken over "participant observation", where the researcher assimilates in the observed field. You can't accurately describe an event just by second-hand interviews and narratives, that would only give you somebody else's interpretation of an event. You have to be there to observe.

Five dimensions of observation:

- Covert / overt: How revealed is the observation to the participants? // They don't differentiate between how the observer is revealed in the situation and how much the participants are aware of observation even happening, but perhaps that option is ruled out anyway on ethical grounds.

- Non-participant / participant: How close is the observer to being active in the observed field.

- Systematic / Unsystematic: How much does the observation follow rules and schemes VS being flexible and spontaneous.

- Natural / Artificial: How much is the observed field created artificially or exists naturally.

- Self-observation: How much attention is given to reflection during observation and interpretation.

Non-participant observation

Refraining from intervention in the observed field. The Complete Observer (Gold, 1958) maintains a distance from events to avoid influencing them. You can try to distract the participants from the researcher (or camera // Big Brother), or you can be covert, which is ethically contestable. Covert observation often does happen in public places where you can't inform passersby.

Phases of observation:

- Selection of a setting: where and when.

- Defining what would be documented.

- Training the observers in order to standardize the focus.

- Descriptive observation: an initial presentation of the observed field. // Kind of like script writing

- Focused observation: focus on what is relevant to the research.

- Selective observation: "intended to purposely grasp central aspects" // This is actually only mentioned in Spradley's Participant Observation book. Anyway, the idea is to have these three stages work like a funnel where you start broad and continuously narrow down your observations.

- End: when further observations do not provide further knowledge. // Quite vague!

Problems:

How to observe without influencing? // See The Observer Effect (and the uncertainty principle). When the field is more public and unstructured, the researcher is less conspicuous and it is thus easier to observe without participating.

Case study: Leisure behavior of adolescents,

She didn't want to stand out or influence the events, so she only wrote down the observations after they happened, on scrap paper, beer mats and cigarette boxes. This risks the observations being imprecise or biased, in exchange for not influencing the event (reactivity). The observations were complemented later by interviews. // Sounds like she was still quite a participant. Might seem weird otherwise if she just sits there drinking beer and saying nothing.

"Gendered nature of field work"

The authors note: moving around in public situations is more restricted for women due to dangers, and their perceptions are much more sensitive to those restrictions, which makes them observe differently. Therefore it helps to use mixed-gender teams in observation. // 1973 claim from Lofland, quoted in 1998 by Adler and Adler. Might be a bit outdated. Can think of other advantages / disadvantages for women.

A further suggestion is "painstaking self-observation" of the researcher. Always reflect on the process, during the observation and after.

The topic is concluded to be a strategy "associated more with an understanding of methods based on quantitative and standardized research". It is an attempt to gain an external view of an event as it naturally occurs while still being inside it and gaining an inside perspective, but without being completely covert, to avoid ethical problems. The authors advise applying it "mainly to the observation of public spaces in which the number of members cannot be limited or defined."

Virtual ethnography

Markham 2004:

- Internet as a place: a cultural and social space in which meaningful human interactions occur.

- Internet as a way of being: CMC influences our behavior and way of being. // SIDE, Hyperpersonal, SIP etc. Self-extension to avatars, presence..

Research online can use the same methods as ethnography, such as participant observation. // One may think that on the internet it's easier to conduct non-participant observations because the technology allows for easy recording of the exact interactions, as if you are there. Literature refers to this also as 'nonreactive data collection' and 'unobtrusive methods'. [@fieldingSAGEHandbookOnline2008]

Suggested research questions when observing the internet:

- How do the users understand the capacity and capabilities of the internet medium they are using?

- How does the internet affect social relationships in contrast to real life?

- What are the implications of the internet for authenticity and authority? How are they judged?

- Is the virtual experienced as radically different from the real?

// These are all very outdated questions that are continuously and expansively studied in the literature.

Virtual communities (Rheingold 1993)

Social aggregations that emerge on the internet with a sustained web of interpersonal relationships.

Practicalities

Hine 2004: As in any ethnography, the ethnographer is required to have a "sustained presence in the field". But in this case, the field is virtual, boundless, and disembodied. The ethnographer could be physically located anywhere and hop in and out of the field as they wish. Unless ethnographers themselves use the medium for prolonged periods, they will not be able to draw conclusions about it. Hine had problems gathering information because she couldn't get good responses to her newsgroup postings and web page, as opposed to real-life inquiries, in which she is more experienced.

// Nowadays we also need to consider physical locations in virtual ethnography, for example Augmented/Mixed Reality, telepresence robots. And we also need to consider embodied approaches in the virtual space when observing Virtual Reality spaces.

It is possible to go beyond the formal communication medium itself and document more 'meta' data. For example screen recording of usage, and recording and sensing of the users while they are physically interacting with the medium. Since access to online participants' real life is limited, virtual ethnography may also be limited.

More up-to-date additions

Postill and Pink (2012) suggest that when conducting virtual ethnography in social media, ethnographers must not restrict themselves to a particular community within a medium or even to a particular medium. The heavy integration of hyperlinks, hashtags and references in pages and platforms such as Twitter, Facebook and Instagram keeps on expanding the field of observation. The researcher should be mobile, using those links just as users would.

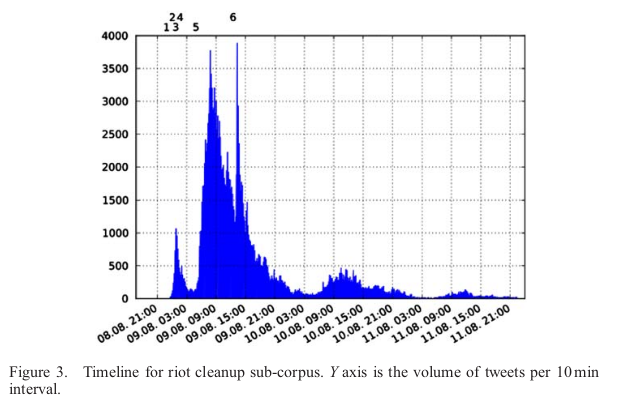

Marres, in the book Digital Sociology, suggests a distinction between two approaches: 1) Adapting existing sociological and anthropological methods to the digital realm, and 2) Coming up with new native digital methods, for example a pioneering study applying big data analysis and visualization to Twitter activity during the 2011 riots in the UK:

// My thoughts: When doing ethnography online, we should also include the human and non-human agents that are involved in the process of social media discussion, for example: content moderators, recommendation algorithms, platform developers.

Coding and categorizing

Qualitative Content Analysis

Categorizing material in order to reduce it.

Procedural steps:

- Define the material by selecting the parts that are relevant for the research.

- Analyze the situation of data collection (who, where..).

- Formal classification (transcription protocol, recording method) // Examples of methods?

- Direction of the analysis (what the researcher wants to interpret from the material) // Is this a place to estimate bias?

- Differentiating the already formed research question into sub-questions.

- Defining the analytical technique (Summarizing, Explicative, Structuring)

- Defining the analytical units:

- Coding unit: "the smallest element of material which may be analyzed, the minimal part of the text which may fall under a category"

- Contextual unit: "the largest element in the text which may fall under a category".

- Analytic unit: which passages "are analyzed one after the other."

- Analytic method is performed

- Re-assess categories based on the material.

- Interpreting the results

- Application of the content analytic quality criteria // what is that? validity? what are the questions of validity?

// More updated in 2004 Mayring?

"Because of its systematic character, qualitative content analysis is especially suitable for computer-supported research (Huber 1992; Weitzman and Miles 1995; see 5.14). This is not a matter of automatized analysis (as in quantitative computerized content analysis), but rather of support and documentation of the individual research steps as well as support functions in searching, ordering and preparing for quantitative analyses. In this connection the ATLAS.ti program, developed for the purpose of qualitative content analysis at the Technical University in Berlin, has proved to be of particular value (cf. Mayring et al. 1996)."

// Open source alternatives? RQDA, Taguette?
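To make the "support and documentation" role of software concrete, here is a minimal sketch of what such a tool keeps track of. It is not how ATLAS.ti, RQDA or Taguette work internally; the class name, the interview labels and the category names are all hypothetical. The categories are assigned by the researcher, and the code only stores, retrieves and counts them.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class CodedSegment:
    source: str    # which interview or document the passage comes from
    text: str      # the coding unit selected by the researcher
    category: str  # category assigned by the researcher, not by the program

def segments_in(segments, category):
    """Search function: all passages filed under one category."""
    return [s for s in segments if s.category == category]

def category_frequencies(segments):
    """Preparation for quantitative analysis: how often each category occurs."""
    return Counter(s.category for s in segments)

# Hypothetical, manually coded material
segments = [
    CodedSegment("interview_1", "waited to teach finally", "anticipation"),
    CodedSegment("interview_1", "practice experienced as big fun", "positive experience"),
    CodedSegment("interview_2", "looking forward to practice", "anticipation"),
]

print(category_frequencies(segments))
print([s.source for s in segments_in(segments, "anticipation")])
```

Keeping the original text next to each category makes it possible to go back and check whether a paraphrase or category has drifted away from what was actually said.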

Summarizing content analysis

Reductions:

- Material is paraphrased and less relevant material is dismissed. // Who determines what is relevant (can ornamenting and repeating matter?)? How to not butcher meaning in translation to a "coherent level of language" ??

- Generalization of particular cases into more abstract // Aren't particular cases important?

- Similar paraphrases are grouped into one.

"therefore, I have already waited for it, to go to a seminar school, until I finally could teach there for the first time" --> "waited to teach finally" --> "looking forward to practice." --> "practice not experienced as shock but as big fun" // Huge change of meaning!

Explicative content analysis

Explaining things said by looking up definitions or further info. A "narrow" content analysis looks for explanations in the text, while a "wide" content analysis looks for content outside of the text. // Explanations by definitions are too objective for subjective interviews! Assuming what somebody meant

Structuring content analysis

Defining internal structures, content domains, salient features (typifying) and defining scales. // Not explained thoroughly and only one example is given in the case of scales.

Contribution / Limitation

The method is very clean and organized, but it is also very reductive and may lose meanings. "Categorization of text based on theories may obscure the view of the contents rather than facilitate analyzing the text in its depth and underlying meanings." Explicative techniques risk being too schematic and not going deep enough to explain the text.

Thematic coding

When conducting a group comparative study (Strauss 1987), "a method which seeks to guarantee comparability by defining topics, and at the same time remaining open to the views related to them." // It does that because it doesn't try to create overarching themes over all of the groups in one shot, but instead creates the themes for every single group and only then compares them.

Especially helpful with different social/cultural groups // but can it help otherwise?

Procedure:

Writing all case descriptions for the group interviews:

- A 'motto' statement for the interview.

- A short description of relevant demographic information.

- Summary of central topics that were brought up in the interview.

Deepening on every single case (difference with Strauss), first open coding (expressing data and phenomena as concepts), and then selective coding (formulating the one story of the case)

Strauss coding guidelines for phenomena:

1. Conditions: Why? What has led to the situation? Background? Course?

2. Interaction among the actors: Who acted? What happened?

3. Strategies and tactics: Which ways of handling situations, e.g., avoidance, adaptation?

4. Consequences: What changed? Consequences, results?

- Cross-checking the coding between the cases to increase comparability.

// Example lacks final results
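The "themes per group first, comparison afterwards" logic can be sketched with plain sets. This is an illustration only: the group names and themes are hypothetical, and real thematic coding compares the content of the themes, not just their labels.

```python
def compare_themes(themes_by_group):
    """Return the themes shared by all groups and the themes unique to each group."""
    all_sets = list(themes_by_group.values())
    shared = set.intersection(*all_sets) if all_sets else set()
    unique = {}
    for name, themes in themes_by_group.items():
        # Everything that any other group mentioned
        others = set().union(*(t for n, t in themes_by_group.items() if n != name))
        unique[name] = themes - others
    return shared, unique

# Hypothetical themes, developed separately for each group before comparing
themes_by_group = {
    "group_a": {"trust", "risk", "control"},
    "group_b": {"trust", "convenience"},
    "group_c": {"trust", "risk"},
}
shared, unique = compare_themes(themes_by_group)
print(shared)
print(unique)
```

Themes that appear in some but not all groups (here "risk") end up in neither result, which is a reminder that the interesting comparisons often need a closer look than a simple overlap.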

Contribution / Limitation

In line with constructivist approaches. Suitable more for comparing between social groups, not for general analysis of phenomena. Can be time consuming!

Grounded Theory Coding (From Flick 5th edition)

Main reference: Glaser, Barney G., Anselm L. Strauss, and Elizabeth Strutzel. “The Discovery of Grounded Theory; Strategies for Qualitative Research.” Nursing Research 17, no. 4 (1968): 364.

Initial key components:

- A spiral of cycles of data collection, coding, analysis, writing, design, theoretical categorization, and data collection.

- The constant comparative analysis of cases with each other and to theoretical categories throughout each cycle. // What theoretical categories?

- A theoretical sampling process based upon categories developed from ongoing data analysis.

- The size of sample is determined by the “theoretical saturation” of categories rather than by the need for demographic “representativeness”, or simply lack of “additional information” from new cases. // Then can they be not representative? Isn't that a problem?

- The resulting theory is developed inductively from data rather than tested by data, although the developing theory is continuously refined and checked by data.

- Codes “emerge” from data and are not imposed a priori upon it.

- The substantive and/or formal theory outlined in the final report takes into account all the variations in the data and conditions associated with these variations. The report is an analytical product rather than a purely descriptive account. Theory development is the goal. (Hood 2007, p. 154, original italics)

After much debate, unifying integral aspects

- data gathering, analysis and construction proceed concurrently;

- coding starts with the first interview and/or field notes;

- memo writing also begins with the first interview and/or field notes;

- theoretical sampling is the disciplined search for patterns and variations;

- theoretical saturation is the judgment that there is no need to collect further data;

- identify a basic social process that accounts for most of the observed behavior.
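Since theoretical saturation is described as a judgment, any code can only approximate it. The sketch below uses one possible heuristic, which is an assumption of mine and not part of grounded theory itself: declare saturation once a chosen number of consecutive cases (`patience`) adds no new codes. The codes and cases are hypothetical.

```python
def saturation_point(codes_per_case, patience=2):
    """Return the 1-based index of the case at which saturation is declared, or None."""
    seen = set()
    cases_without_new = 0
    for index, codes in enumerate(codes_per_case, start=1):
        new_codes = set(codes) - seen
        seen |= new_codes
        # Reset the counter whenever a case contributes something new
        cases_without_new = 0 if new_codes else cases_without_new + 1
        if cases_without_new >= patience:
            return index
    return None

# Hypothetical codes assigned to five successive cases
codes_per_case = [
    {"loss", "coping"},
    {"coping", "identity"},
    {"loss"},
    {"identity", "coping"},
    {"stigma"},
]
print(saturation_point(codes_per_case, patience=2))
print(saturation_point(codes_per_case, patience=3))
```

With `patience=2` the rule stops after the fourth case and never sees the new code in the fifth; with `patience=3` it does not stop at all within these five cases. The outcome depends heavily on an arbitrary threshold, which is exactly why saturation stays a matter of judgment.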

Finding a Relevant Problem: Discovering or Constructing it

"aiming at developing a new theory, where so far a lack of theoretical knowledge exists". Whether it comes from gaps in existing research or personal experiences and timely events.

Getting Started: Using Sensitizing Concepts and Finding Relevant Situations, People, or Events

Find concepts that refer to existing research and can guide the process. Then find your first case/situation to study.

Collecting or Producing Relevant Data

Debate. Does data:

- emerge in the field (Glaser),

- get collected by using specific methods (Strauss 1987)

- get constructed or produced by the researcher in the field (see Charmaz 2006).

"you should consider a strategy that can accommodate several forms of data" "you can use almost everything as data—whatever is helpful for understanding the process and the field you are interested in and to answer your research questions." // Trying to keep the student calm about these debates. Can we see example arguments? What kind of methods exist for making data out of anything?

Memoing: Producing Evidence through Writing

Not descriptive but inductive of existing data: "conceptualize the data in narrative form" (p. 245). "Memo writing can include references to the literature and diagrams for linking, structuring, and contextualizing concepts. They may also incorporate quotes from respondents in interviews or field conversations as further evidence in the analysis." It is good to start writing a research diary immediately. Memos can extend beyond theory construction to methodological and observational notes, or a personal diary.

Analysis through Coding

The main component of grounded theory and the subject of much debate.

Strauss and Corbin

Open coding: The first step. Data are segmented and attached to concepts. "it is used for particularly instructive or perhaps extremely unclear passages." Every word/sentence/paragraph can be coded. (// Assumptions are made about the intentions of the participants; how are those founded?) Possible sources of the codes are the social science literature or, preferably, the expressions of the interviewees themselves (in vivo coding). Concepts are generalized and grouped into categories. They are then dimensionalized, i.e. put into (linear?) scales such as a color's shade from dark to light. The researcher attempts to derive meaning from the participants' text. Questions to consider when coding:

- What? What is the issue here? Which phenomenon is mentioned?

- Who? Which persons/actors are involved? What roles do they play? How do they interact?

- How? Which aspects of the phenomenon are mentioned (or not mentioned)?

- When? How long? Where? Time, course, and location.

- How much? How strong? Aspects of intensity.

- Why? Which reasons are given or can be reconstructed?

- What for? With what intention, to which purpose?

- By which? Means, tactics, and strategies for reaching the goal. // Also mentions the "flip-flop technique" (comparing extremes of dimensions) and the "waving-the-red-flag technique" for self-evidence. Example?

Axial coding: Identifying links and hierarchies between categories/sub-categories. Use of the paradigm model:

                 Context
                    |
                    |
    Causes ---> Phenomenon ----> Consequences
                    |
                    |
         Strategies (action/interaction)

// This should be expanded to systems thinking? Also what about the broader socio-material context

Selective coding: Abstracting and enriching the axial coding to end up with one central category and phenomenon along with its causes, consequences, strategies and context. Eventually you should be able to say: “Under these conditions (listing them) this happens; whereas under these conditions, this is what occurs” // This reminds a bit of mediator/moderator and quantitative analysis. Why not go so far?
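As a note-keeping aid, the elements of the paradigm model can be held in one record that also produces the "under these conditions" sentence. This is a hypothetical sketch: the class, its fields and the example content are assumptions for illustration, not something taken from Strauss and Corbin or from Flick.

```python
from dataclasses import dataclass, field

@dataclass
class ParadigmModel:
    phenomenon: str
    causes: list = field(default_factory=list)
    context: list = field(default_factory=list)
    strategies: list = field(default_factory=list)
    consequences: list = field(default_factory=list)

    def statement(self):
        """Render the central category as a conditional statement."""
        conditions = ", ".join(self.causes + self.context)
        strategies = ", ".join(self.strategies)
        outcomes = ", ".join(self.consequences)
        return (
            f"Under these conditions ({conditions}), {self.phenomenon} "
            f"is handled through {strategies} and results in {outcomes}."
        )

# Hypothetical central category, filled in by the researcher after axial coding
model = ParadigmModel(
    phenomenon="withdrawal from group chats",
    causes=["repeated misunderstandings"],
    context=["text-only medium"],
    strategies=["switching to voice calls"],
    consequences=["fewer conflicts"],
)
print(model.statement())
```

Writing one such record per contrasting set of conditions gives the "whereas under these conditions" half of the sentence.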

Chronic illness study and trajectory phases, // how did they fit this into the paradigm model?

Glaser’s Approach: Theoretical Coding

Criticizes axial coding: "forcing a structure on the data instead of discovering what emerges as structure from the data and the analysis". Instead, uses coding families to categorize and also to find new codes. The coding families are also analytical categories, but they are broader: causes, effects, stages, intensities, types, strategies, interactions, identity, turning points, social norms, social contracts, family.

// Question: This is all so humanistic, can these categories apply to nonhuman? Practices for the “New” in the New Empiricisms, the New Materialisms, and Post Qualitative Inquiry?

"As Kelle (2007, p. 200) holds, this set of coding families comes with a lot of background assumptions that are not made explicit, which limits their usefulness for structuring substantive codes"

Then again there is selective coding. In the case study they were able to compare situations of awareness and mutual pretense in different cases, between the hospital context of dying patients and circus clowns. // Doesn't say what coding families they used

Charmaz’s Approach to Coding in Grounded Theory Research

"Charmaz suggests doing open coding line by line, because it “also helps you to refrain from imputing your motives, fears, or unresolved personal issues to your respondents and to your collected data”"

In line-by-line coding she translates the interviewee's words to her psychological analysis, then focuses on a few codes she found.

"All three versions discussed here treat open coding as an important step. All see theoretical saturation as the goal and endpoint of coding. They all base their coding and analysis on constant comparison between materials (cases, interviews, statements, etc.). Glaser retains the idea of emerging categories and discovery as epistemological principle. In contrast, Charmaz (2006) sees the whole process more as “constructing grounded theory” (hence the title of her book). All see a need for developing also formal categories and links."

Charmaz's study of chronically-ill men and their handling of loss of gender roles contributed a lot to research, but "it is neither entirely clear how far the sampling is based on theoretical sampling, nor clear about which of the coding strategies were used exactly to analyze the data" // Was more intuitive and constructive, maybe that's OK?

Limitations

- hazy, flexible method, difficult to teach

- endlessness of options for coding and comparisons (when to reach saturation?).

Naturally Occurring Data: Conversation, Discourse, and Hermeneutic Analysis

"approaches that focus on how something is said, in addition to the content of what is said ... look at how an argument or discussion develops and is built up step by step, rather than looking for specific contents across the (whole) data set ... order is produced turn by turn (conversation analysis), or that meaning accumulates in the performance of activity (objective hermeneutics)."

Conversation analysis

"actors, in the situational completion of their actions and in reciprocal reaction to their interlocutors, create the meaningful structures and order of a sequence of events and of the activities that constitute these events." // sounds much more phenomenological. Method:

- Turns at talk are treated as the product of the sequential organization of talk, of the requirement to fit a current turn, appropriately and coherently, to its prior turn.

- In referring … to the observable relevance of error on the part of one of the participants … we mean to focus analysis on participants’ analyses of one another’s verbal conduct.

- By the “design” of a turn at talk, we mean to address two distinct phenomena: (1) the selection of an activity that a turn is designed to perform; and (2) the details of the verbal construction through which the turn’s activity is accomplished.

- A principal objective of CA research is to identify those sequential organizations or patterns … which structure verbal conduct in interaction.

- The recurrences and systematic basis of sequential patterns or organizations can only be demonstrated … through collections of cases of the phenomena under investigation.

- Data extracts are presented in such a way as to enable the reader to assess or challenge the analysis offered.

// Can it be done for mediated conversations? Does it also require the physical experience of operating the interface? Big problems in turn-taking.

Toerien (2014, p. 330) lists four key stages of conversation analysis:

- Collection building;

- Individual case analysis (how are turns designed, how is the sequence organized?);

- Pattern identification;

- Accounting for or evaluating your patterns.

"the principle is that the talk to be analyzed must have been a “naturally occurring” interaction, which was only recorded by the researcher. Thus conversation analysis does not work with data that have been stimulated for research purposes—like interviews that are produced for research purposes. Rather the research limits its activities of data collection to recording occurring interaction with the aim of coming as close as possible to the processes that actually happened, as opposed to reconstructions of those processes from the view of participant (e.g., reconstructions created through the interview process). This recorded interaction then is transcribed systematically and in great detail and thus transformed in the actual data that are analyzed." // Refers back to the problem of non-participant observation.

Emphasis is on context and sequence. // But how far should you go into the context? (Materialism...)

Limitations: Subjective meaning or the participants’ intentions are not relevant to the analysis. Analyses often get lost in the formal detail: they isolate smaller and smaller particles and sequences from the context of the interaction as a whole.

Discourse analysis

"Discourse analysis is concerned with the ways in which language constructs and mediates social and psychological realities. Discourse analysts foreground the constructive and performative properties of language, paying particular attention to the effects of our choice of words to express or describe something." // Reminds of Habermas

"pays the same attention to what the interviewer says as to what the interviewee says" Also has coding. "analysis of psychological phenomena like memory and cognition as social and, above all, discursive phenomena."

// Question: How can we use discourse analysis to talk about the flawed post-truth political discourse happening right now, what are the problems? Habermas?

Questions to Address in a Discourse Analysis

- What sorts of assumptions (about the world, about people) appear to underpin what is being said and how it is being said?

- Could what is being said have been said differently without fundamentally changing the meaning of what is being said? If so, how?

- What kind of discursive resources are being used to construct meaning here?

- What may be the potential consequences of the discourses that are used for those who are positioned by them, in terms of both their subjective experience and their ability to act in the world?

- How do speakers use the discursive resources that are available to them?

- What may be gained and what may be lost as a result of such deployments?

// One more question to ask, who is allowed to participate in a certain discourse?

Foucauldian Discourse Analysis:

- The researcher should turn the text to be analyzed into written form, if it is not already.

- The next step includes free association to varieties of meaning as a way of accessing cultural networks, and these should be noted down.

- The researchers should systematically itemize the objects, usually marked by nouns, in the text or selected portion of text.

- They should maintain a distance from the text by treating the text itself as the object of the study rather than what it seems to “refer” to.

- Then they should systematically itemize the “subjects”—characters, persona, role positions—specified in the text.

- They should reconstruct presupposed rights and responsibilities of “subjects” specified in the text.

- Finally, they should map the networks of relationships into patterns. These patterns in language are “discourses” and can then be located in relations of ideology, power, and institutions.

Look for constructions of agency, role, actor or victim: "the active agent versus the power of discourse to construct objects including the human subject". Emphasis on criticality: phenomena are viewed as historically constituted, subjectivity is taken into account in the research process, and the subjectivity of the researcher is included in the form of reflexivity.

Problem?: fuzzy and drifts away from more analytical origins.

Objective hermeneutics

"draws a basic distinction between the subjective meaning that a statement or activity has for one or more participants and its objective meaning. The latter is understood by using the concept of a “latent structure of meaning.”"

"a sequential rough analysis aimed at analyzing the external contexts in which a statement is embedded in order to take into account the influence of such contexts...The central step is sequential fine analysis. This entails the interpretation of interactions on nine levels "

0. Explication of the context which immediately precedes an interaction.

1. Paraphrasing the meaning of an interaction according to the verbatim text of the accompanying verbalization.

2. Explication of the interacting subject’s intention (minor role).

3. Explication of the objective motives of the interaction and of its objective consequences (context thought experiments, structure of interpretation, constructs stories about as many contrasting situations as consistently fit a statement).

4. Explication of the function of the interaction for the distribution of interactional roles (conversation analysis).

5. Characterization of the linguistic features of the interaction (syntactic, semantic, or pragmatic).

6. Exploration of the interpreted interaction for constant communicative figures (increasing generalization).

7. Explication of general relations.

8. Independent test of the general hypotheses that were formulated at the preceding level on the basis of interaction sequences from further cases.

The interpretation should focus on autonomous contingency.

"is necessary to rearrange the events reported in the interview in the temporal order in which they occurred. The sequential analysis should then be based on this order of occurrence, rather than the temporal course of the interview:"

Limitations

Because of the great effort involved in the method, it is often limited to single case studies.

// Can this also be endless when talking about multiple meanings and contexts? Foucault rejects that type of hermeneutics because it only uncovers what is already known and not said. It doesn't hypothesize about why, or about what caused these meanings, "what systematizes the thoughts".

Comments from Anthropology contra Ethnography / Tim Ingold

Ethnography is not a method. It's an end (an account of people's lives and experiences).

The very idea of “ethnographic fieldwork” perpetuates the notion that what you are doing in the field is gathering material on people and their lives—or what, to burnish your social scientific credentials, you might call “qualitative data”—which you will subsequently analyze and write up. That’s why participant observation is so often described in textbooks as a method of data collection. And it is why so much ink has been spilled on the practical and ethical dilemmas of combining participation and observation, as though they pointed in different directions. There is something deeply troubling, as we all know, about joining with people, apparently in good faith, only later to turn your back on them so that yours becomes a study of them, and they become a case. But there is really no contradiction between participation and observation; indeed, you simply cannot have one without the other. The great mistake is to confuse observation with objectification. To observe is not, in itself, to objectify. It is to notice what people are saying and doing, to watch and listen, and to respond in your own practice. That is to say, observation is a way of participating attentively, and it is for this reason a way of learning. As anthropologists, it is what we do, and what we undergo. And we do it and undergo it out of recognition of what we owe to others for our own practical and moral education. Participant observation, in short, is not a technique of data gathering but an ontological commitment. And that commitment is fundamental to the discipline of anthropology.

// Perhaps if we stopped seeing ethnography as an objectifying form of data collection, which is in itself a problem both for participant and non-participant, we could just see it for what it is: an authentic representation or interpretation of people's lives and experiences, which can be interpreted by the viewer in a number of ways, but not used as a case study to make generalizations.

Notes on Ethnography from Strange Encounters / Sara Ahmed

The translation of strange cultures to familiar terms, a creation of a stranger in order to destroy it.

Post-modern reflexive ethnography tries to not objectify the observed but instead go into dialog with them, make them co-authors // This is of course not possible at all in non-participant observation.

"To argue that there has been such a shift in the relation between ethnography and authority is to presuppose the possibility of overcoming the relations of force and authorisation that are already implicated in the ethnographic desire to document the lives of strangers."

// Is ethnography authoritative by definition? The ethnographer is already in a position of power because they turn the experience of others into knowledge relevant to their own field, for publishing in a journal, marking it as data, etc. Perhaps it would be better not to conceal those power relations by pretending to be equals with the observed, and instead just reflect on them.

"postmodern fantasy that it is the ‘I’ of the ethnographer who can undo the power relations that allowed the ‘I’ to appear. Such a fantasy allows the ethnographer to be praised for her or his ability to listen well. So it remains the ethnographer who is praised: praised for the giving up of her or his authority. The event of recognition demonstrates that the ethnographic document still returns home in postmodernism, but that the returning home is concealed in the fantasy of being-together-as-strangers"

"Our task, in opening out the possibility of strangers knowing differently to how they are known, is to draw attention to the forms of authorisation and labour that are concealed by stranger fetishism. Such stranger fetishism is implicit in the assumption that the stranger is any-body we do not know, or in the assumption that we can transform the ‘being’ of strangers into knowledge. It is only by contesting the discourses of stranger fetishism, that we can open out the possibility of a knowledge that does not belong to the (ethnographic) community, even in the event of its failure to ‘know the stranger’."

// We shouldn't treat the stranger as one absolute stranger, or claim that we are all strangers and all the same. We should recognize the stranger but, more importantly, the relations of power and production involved in observation, and try to challenge them.